Visual search on the web has been around for some time.

In 2008, TinEye became what it describes as the first image search engine to use image identification technology, and by 2010, the Google Goggles app allowed users to search the physical world with their phone cameras.

But in the last couple of years, visual search has come into new prominence, with companies like Pinterest and Bing developing into serious contenders in the visual search space, and search engines like Splash conceptualising new ways to search the web visually.

We now have an impressive range of visual search methods available to us: we can search with images, with part of an image, with our cameras, with paint on a digital canvas. And combined with applications in ecommerce, and recent advances in augmented reality, visual search is a powerful tool with huge potential.

So what can it do currently, and where might it develop in the future?

Then and now: The evolution of visual search

Although the technology behind image search has come on in leaps and bounds in the past few years, it builds on developments that have taken place over a much longer period.

Image search on the web was around even before the launch of reverse image search engine TinEye in 2008. But TinEye claims that it was the first such search engine to use image identification technology rather than keywords, watermarks or metadata. In 2011, Google introduced its own version of the technology, which allowed users to perform reverse image searches on Google.

Both reverse image search tools could identify famous landmarks, find other versions of the same image elsewhere on the web, and locate 'visually similar' images with a similar composition of shapes and colours. Neither used facial recognition technology, and TinEye was (and still is) unable to recognise the outlines of objects.

Google reverse image search in 2011. Source: Search Engine Land

Meanwhile, Google Goggles allowed users of Android smartphones (and later in 2010, iPhones and iPads) to identify landmarks in the physical world, as well as product labels and barcodes that let users search online for similar products. This was probably the first iteration of what seems to be a natural marriage between visual search and ecommerce, something I'll explore a bit more later on.

The Google Goggles app is still around on Android, although the technology hasn't advanced much in the last few years (tellingly, it was removed as a feature from Google Mobile for iOS for being “of no clear use to too many people”). It tends to pale in comparison with a more modern 'object search' app like CamFind.

You tried, Goggles.

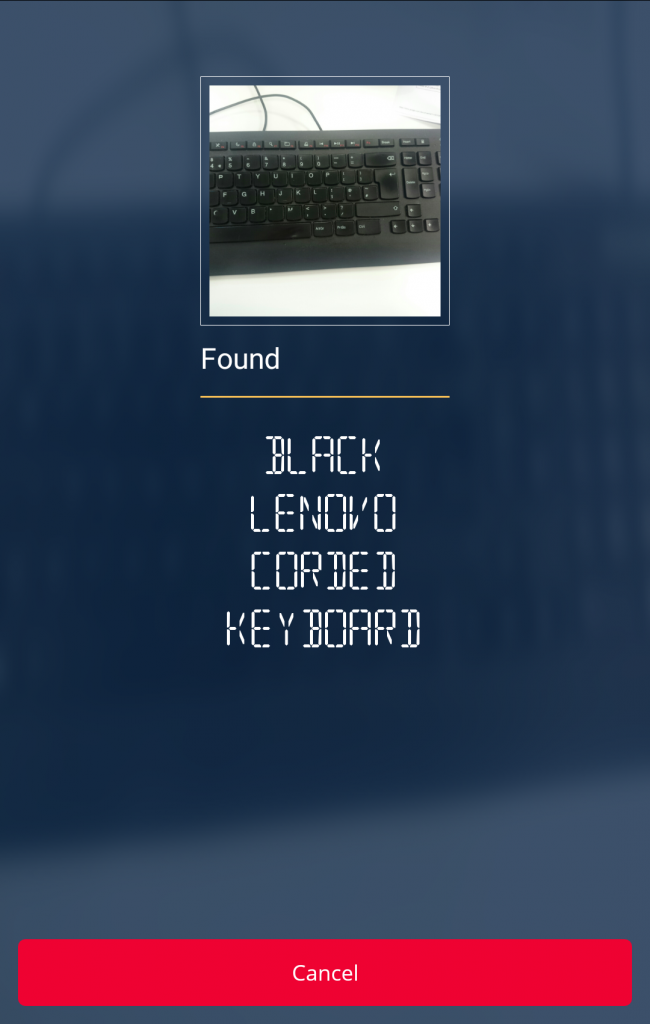

CamFind is a visual search and image recognition mobile app that was launched in 2013, and while it doesn't appear to be able to solve Sudoku puzzles for you, it does have an impressive rate of accuracy.

Back when Google Glass was still a thing, Image Searcher, the startup behind CamFind, developed a version of the app to bring accurate visual search to Google Glass, activated by the command “OK Glass, what do you see?” This is the kind of futuristic application of visual search that many people imagined for a technology like Google Glass, and it could have had great potential if Google Glass had caught on.

The CamFind mobile app has an impressive accuracy rate, even down to identifying the brand of an object.

When the 'pinboard'-style social network Pinterest launched in 2012, it was a bit of a dark horse, gaining huge popularity with a demographic of young-to-middle-aged women but remaining obscure in most conventional tech circles. Even those who recognised its potential as a social network probably wouldn't have guessed that it would also shape up into a force to be reckoned with in visual search.

But for Pinterest, accurate visual search just makes sense: it lets Pinterest serve relevant Pin recommendations to users who are looking for something visually similar (say, the perfect copper lamp to light their living room), and lets users home in on the specific part of a Pinned image that interests them.

In 2014, Pinterest acquired VisualGraph, a two-person startup cofounded by one of Google's first computer vision engineers, bringing the company's visual search know-how into the fold. In the same year, it introduced and began refining a function that allowed users to highlight a specific part of a Pin and find other Pins that are visually similar to the highlighted area – two years ahead of Bing, which only introduced that functionality to its mobile image search in July 2016.

Bing has pipped Pinterest to the post by introducing visual search with a smartphone camera to its native iOS app (I can't comment on how accurate it is, as the Bing iOS app is only available in the US), something that Pinterest is still working on launching. But it's clear that the two companies are at the vanguard of visual search technology, and it's worth watching both to see what developments they announce next.

Meanwhile, Google is yet to offer any advance on Google Goggles for more accurate searching in the physical world, but you can bet that Google isn't going to let Pinterest and Bing stay ahead of it for too long. In July, Google announced the acquisition of French startup Moodstocks, which specialises in machine learning-based image recognition technology for smartphones.

And at Google I/O in May, Google's Engineering Director Erik Kay revealed some pretty impressive image recognition capabilities for Google's new messaging app, Allo.

“Allo even offers smart replies when people send photos to you. This works because in addition to understanding text, Allo builds on Google's computer vision capabilities to understand the content and the context of images. In this case, Allo understood that the picture was of a dog, that it was a cute dog, and even the breed of the dog. In our internal testing, we found that Allo is 90% accurate in determining whether a dog deserves the 'cute dog' response.”

Visual search and ecommerce: A natural partnership

How many times have you been out and about and wished you could find out where that person bought their cool shoes, or their awesome bag, without the awkwardness of having to approach a stranger and ask?

What if you could just use your phone camera to secretly take a snap (though that's still potentially quite awkward if you get caught, let's be honest) and shop for visually similar search results online?

Ecommerce is a natural application for visual search, something that almost every company behind visual search has realised and made an integral part of its offering. CamFind, for example, will take you straight to shopping results for any object that you search, creating a seamless link between seeing an item and being able to buy it (or something like it) online.

Pinterest's advances in visual search also serve the ecommerce side of the platform, by helping users to isolate products that they might be interested in and smoothly browse similar items. The camera-based 'object search' function that Pinterest is working on for its mobile app would likewise help people find items on Pinterest that resemble ones they like in the physical world, with a view to buying them.

With the myriad possibilities that visual search holds for ecommerce, it's no surprise that Amazon has also thrown its hat into the ring. In 2014, it integrated a shopping-by-camera function into its main iOS app (and has since released the function on Android), and also launched Firefly, a visual recognition and search app for the Amazon Fire Phone.

Even after the Fire Phone flopped, Amazon refused to give up on Firefly, and introduced the app to the more affordable Kindle Fire HD. The visual search function on its mobile app works best with books, DVDs and recognisably branded objects, but it is reasonably accurate with other items too.

Amazon's visual search in action.

Other companies operating at the intersection of visual search and ecommerce that have emerged in the past few years include Slyce, whose slogan is “Give your customer's camera a buy button”, and Catchoom, which creates image recognition and augmented reality tools for retail, publishing and other sectors.

Although searching the physical world has yet to cross over into the mainstream (most people I've talked to about it aren't even aware that the technology exists), that could easily change as the technology becomes more accurate and increasingly widespread.

But ecommerce is only one possible application for visual search. What other uses and innovations could we see spring up around visual search in the future?

The future of visual search?

Aside from the fairly obvious prediction that visual search will become more accurate and more widespread as time goes on, I can imagine various possibilities for visual search going forward, some of which already exist on a small scale.

The visual recognition technology which powers visual search has huge potential to serve as an accessibility aid. Image Searcher, the company behind CamFind, also has an app called TapTapSee which uses visual recognition and voiceover technology to identify objects for visually impaired and blind mobile users. Another app, Talking Goggles, performs the same function using Google Goggles' object identification technology.

Although these are purely recognition apps and not search engines as such, Image Searcher have used a great deal of the feedback they receive from the visually impaired community to integrate the same features into CamFind. It's easy to imagine how the two concepts, if developed in tandem, could be used to provide a truly accessible visual search to visually impaired users in the future.

And if camera-based visual search were combined with recent advances in voice search and natural language processing, it's possible to imagine a future in which the act of searching visually becomes virtually interface-free. Sundar Pichai, CEO of Google, demonstrated a very similar capability at Google I/O when he showed off Google's new voice assistant, Google Assistant.

“For example, you can be in front of this structure in Chicago and ask Google, 'Who designed this?' You don't need to say 'the bean' or 'the cloud gate.' We understand your context and we answer that the designer is Anish Kapoor.”

In this example, the unstated context for Pichai's question “Who designed this?” is likely provided by location data, but it could just as easily be visual input, provided by a smartphone camera or an improved Google Glass-like device.

I mentioned something called Splash earlier on in this article. Splash, a search interface developed by photo community 500px, is a different type of visual search from any we've looked at so far. The interface is designed to let users search 500px's image library visually using colour, by digitally 'splashing' paint onto a canvas.

As far as visual search engines go, Splash is more of a fun novelty than a practical search tool. You can only search for images in one of five categories – Landscape, People, Animals, Travel and City – so if you want a picture of something that doesn't come under one of those, good luck to you. The search results also tend to respond more to which colours are on the canvas than to what you're trying to depict with it.
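Why would a colour-driven search ignore what you're trying to depict? 500px hasn't said how Splash matches canvases to photos, so the sketch below is purely hypothetical: it assumes a simple colour-histogram comparison (the names `colour_histogram`, `histogram_intersection` and `rank_by_colour` are invented for illustration). Each image is reduced to the proportions of coarse colour buckets it contains, so two images with the same colours in a completely different arrangement look identical to it.

```python
from collections import Counter

# Hypothetical model: an image is a list of rows of (r, g, b) pixels, 0-255.
Pixel = tuple[int, int, int]
Image = list[list[Pixel]]


def colour_histogram(image: Image, bins: int = 4) -> dict[tuple[int, int, int], float]:
    """Quantise each channel into `bins` buckets and return normalised bucket counts.

    Pixel positions are discarded here, which is why layout is ignored.
    """
    step = 256 // bins
    counts = Counter((r // step, g // step, b // step) for row in image for (r, g, b) in row)
    total = sum(counts.values())
    return {bucket: n / total for bucket, n in counts.items()}


def histogram_intersection(h1: dict, h2: dict) -> float:
    """Similarity in [0, 1]: how much of the two colour distributions overlap."""
    return sum(min(h1.get(k, 0.0), h2.get(k, 0.0)) for k in set(h1) | set(h2))


def rank_by_colour(query: Image, library: dict[str, Image]) -> list[tuple[str, float]]:
    """Score every library image against the query canvas, best match first."""
    q = colour_histogram(query)
    scores = [(name, histogram_intersection(q, colour_histogram(img))) for name, img in library.items()]
    return sorted(scores, key=lambda s: s[1], reverse=True)


BLUE, GREEN, RED = (30, 60, 200), (40, 160, 60), (200, 40, 40)

# The 'painted' query: blue sky above green grass.
canvas = [[BLUE, BLUE], [GREEN, GREEN]]
library = {
    "landscape": [[BLUE, BLUE], [GREEN, GREEN]],    # sky over a field
    "upside_down": [[GREEN, GREEN], [BLUE, BLUE]],  # same colours, different layout
    "red_car": [[RED, RED], [BLUE, GREEN]],
}
print(rank_by_colour(canvas, library))
# → [('landscape', 1.0), ('upside_down', 1.0), ('red_car', 0.5)]
```

The upside-down image scores exactly as well as the real landscape, which mirrors the behaviour described above: a matcher like this can only tell you which colours are on the canvas, not what shape they make.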

Not really what I was after…

Even so, I like the different take that Splash gives on searching visually, and I think the idea could have a lot of interesting potential if it were developed and refined further. The other types of visual search we've talked about so far depend on having a picture or an object to hand, but what if you wanted to search for something you knew how to draw but had no example of?

Another thing I would find incredibly useful in my work as a journalist (where I'm often called upon to source stock images) would be the ability to search for a visual concept.

Say I'm looking for a picture to represent 'email ROI' for a piece I'm writing. It would be really helpful if I could run a visual search for any images which combined visuals relating to email, and visuals relating to money, in some way. Maybe a keyword-based search could get close to what I need, but I think a visual search would be able to cast a wider, and more useful, net.

Finally, if developing visual search continues to be a priority for companies like Pinterest, Bing and Google, I think the most natural evolution of the technology would be to incorporate augmented reality. AR is already advancing into the mainstream – not just with Pokémon Go, but with apps like Blippar, which fuse AR with visual discovery and visual search to add an extra dimension to the world around us.

There's clear potential for this to become a fully-fledged search phenomenon, say with text overlays providing information about objects you want to search for, and the ability to interact with items and purchase them, removing even more friction from ecommerce and enabling users to buy things in the moment of inspiration.

I don't foresee visual search replacing the text-based variety altogether (or at least, not for a very long time). But it does open up a world of exciting new possibilities that will play a big part in whatever's to come for search in the future.
